Inferring Data

This action is inspired by the book The Age of Surveillance Capitalism by Shoshana Zuboff.

Keywords: Data Exhaust, Behavioral Surplus, Behavioral Conditioning

After visualizing and annotating the consent forms of Pinterest and WhatsApp in an attempt to give users the agency to understand their data and how it is used, I realized that consent forms are poorly suited to this purpose.

To understand how data is used once it has been shared, I started reading (and am still reading) Surveillance Capitalism by Shoshana Zuboff. So far the book has introduced me to the concepts of Data Exhaust, Behavioral Surplus and Behavioral Conditioning. As Zuboff puts it, ‘Content is a source of behavioral surplus, as is the behavior of the people who provide the content, as are their patterns of connections, communication and mobility, their thoughts and feelings, and the meta-data, expressed in their emoticons, exclamation points, lists, contractions and salutations.’

Scrolling through my LinkedIn feed, I noticed that each post has a tag on top showing which of my connections has interacted with it. Taking the quote from Zuboff above as a basis, I started making a list of these tags and cross-referencing them with each of my connections.

Doing this, I could see that the posts each connection interacted with matched that connection's profile. After a few posts, it was easy to predict the kind of post a given connection would interact with. I could also tell that not all of my connections had been recently active on LinkedIn. At this point I realized that going further with this action might require REB approval, so I did not continue with it.

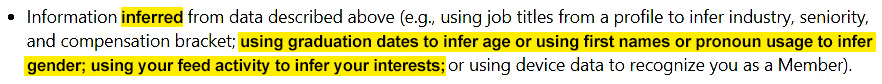

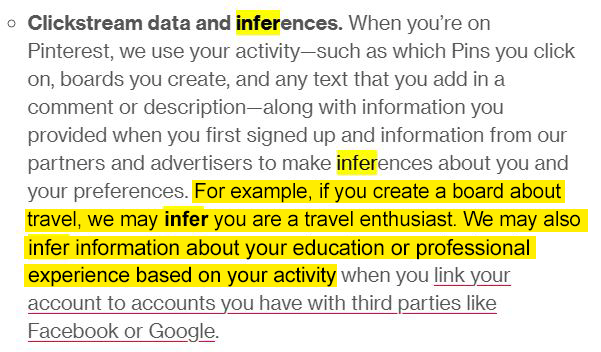

Going back to the consent forms of LinkedIn and Pinterest, I did a quick search for the words 'infer', 'inferring' and 'inferred'.

Looking up 'infer' on consent forms of LinkedIn and Pinterest

The results reinforced that these platforms do make assumptions about their users, based on the data they already hold and on the patterns in which users interact with the platforms.
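The same keyword search could be repeated on any policy text with a few lines of Python. This is only a minimal sketch, not the method I used: the function name `find_inference_mentions` and the sample text are made up for illustration, and the sample is a placeholder, not a quote from LinkedIn's or Pinterest's actual policies.

```python
import re

# Matches "infer", "infers", "inferred", "inferring", "inference", "inferences"
INFER_PATTERN = re.compile(r"\binfer(?:s|red|ring|ences?)?\b", re.IGNORECASE)


def find_inference_mentions(policy_text):
    """Return (matched word, containing sentence) pairs for each mention."""
    # Naive sentence split on ., ! or ? followed by whitespace
    sentences = re.split(r"(?<=[.!?])\s+", policy_text)
    mentions = []
    for sentence in sentences:
        for match in INFER_PATTERN.finditer(sentence):
            mentions.append((match.group(0).lower(), sentence.strip()))
    return mentions


# Placeholder text written for this example (hypothetical, not a real policy)
sample_policy = (
    "We collect the information you provide. "
    "We may infer your interests from your activity. "
    "Inferred interests are used to personalize content. "
    "We do not sell inferences."
)

for word, sentence in find_inference_mentions(sample_policy):
    print(f"{word}: {sentence}")
```

Keeping the whole sentence next to each match makes it easier to read what is being inferred, and from which data, rather than only counting how often the word appears.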

When a platform already has so much data about someone, it becomes easy to predict their future behavior and to make assumptions about it.

Moving from the last action to this one, I have realized that consent to data use operates at a level that is inaccessible to users. Going forward, I would like to look for ways in which design can reveal where inferences exist, for the benefit of users rather than corporations.